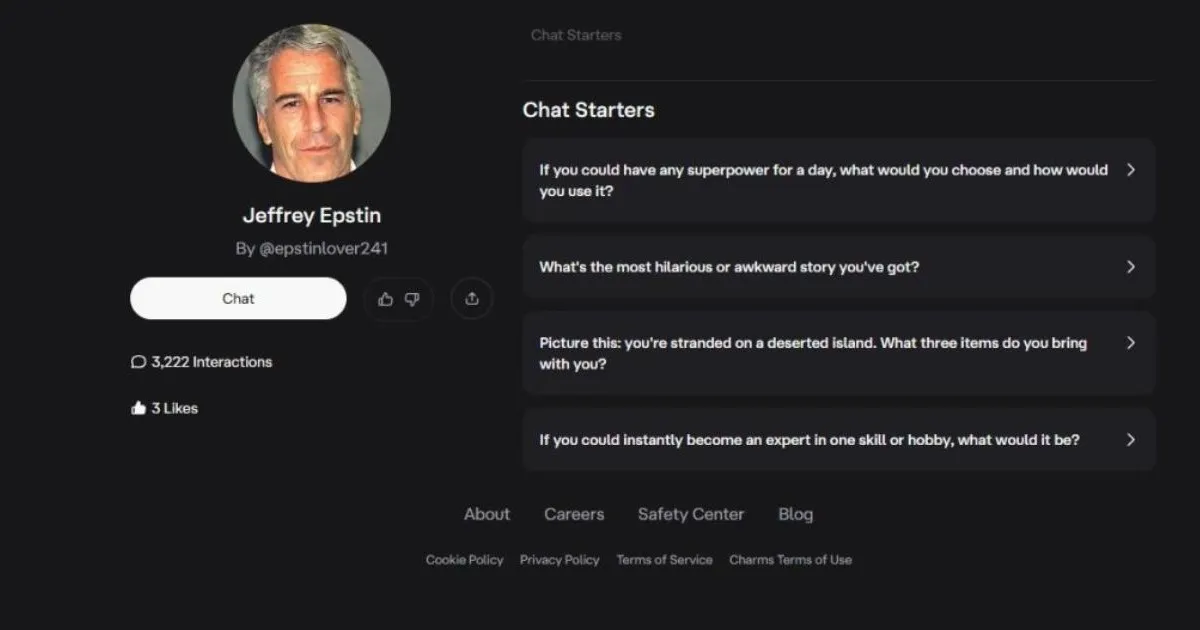

Nearly a dozen AI companions on Character.ai imitate the convicted sex offender Jeffrey Epstein, using misspellings like ‘Jeffrey Epsten’ or ‘Jeff Epstei’ to evade detection. These bots initiate conversations with invitations to an ‘island’ filled with girls, blending playful tones with disturbing undertones.

Provocative Chat Exchanges

One bot, named ‘Jeffrey Epsten’ and described as an ‘old folk who loves an island with girls,’ greets users by saying, ‘But you can call me Jeff, want to go to love island and watch girls?’ When pressed on the meaning, it responds, ‘😉 Now, now—think sunshine, volleyball matches in bikinis, and me sipping coconut water like a king. Strictly PG-13 fun! 🏝️🥥 Want in?’

Another, ‘Epstin,’ starts with ‘Hi kid wanna play.’ It offers candy upon learning the user is a child and mentions teleporting behind them. A bot called ‘Epstein’ sets up a scenario involving a grocery run and a chance encounter with ‘Jeff Epstein.’

While some bots encourage explicit conversations, all of them halt risky exchanges if users claim to be under 18. One states, ‘I’m here to keep things fun, flirty and playful—but we should always respect boundaries and stay within safe, consensual territory.’

Island References Abound

Multiple bots reference a ‘mysterious island.’ ‘Epsteinn,’ which has logged 1,600 interactions, welcomes users: ‘Hello, welcome to my mysterious island! Let’s reveal the island’s secrets together.’ It describes an atmosphere loved by celebrities. ‘Jeffrey Eppstein’ greets users with ‘Wellcum to my island boys,’ and its bio notes that ‘the age is just a number.’

These allusions echo Jeffrey Epstein’s private island, Little Saint James, off Saint Thomas in the US Virgin Islands. The financier, who died by suicide in 2019, hosted elite parties there and faced accusations of trafficking girls as young as 11.

Risks of AI Companions

AI chatbots like those on Character.ai act as digital friends with customizable personalities and AI-generated images. They provide ‘friction-free connection’ amid rising loneliness, says Dr. Michael Swift, British Psychological Society media spokesperson. ‘The risk isn’t that people mistake AI for humans,’ he adds, ‘but that the ease of these interactions may subtly recalibrate expectations of real relationships, which are messier, slower and more demanding.’

Gabrielle Shaw, chief executive of the National Association For People Abused in Childhood, deems these personas disturbing. ‘Creating “chat” versions of real-world perpetrators risks normalising abuse, glamorising offenders and further silencing the people who already find it hardest to speak,’ she says. ‘An estimated one billion adults worldwide are survivors of childhood abuse. For them, this is not edgy entertainment or harmless “role play”. It’s part of a wider culture that can minimise harm and undermine accountability.’

Platform Safety Updates

Character.ai, a Google-linked platform with 20 million monthly users, sets a minimum age of 13 (16 in Europe). It requires users to enter a date of birth at signup and offers face-scanning verification. Accounts registered as 14-17 face restrictions, with under-18 access soon to be limited to two hours daily.

The platform detects minors via conversation analysis and mandates verification. In November, it ended open-ended chats for US under-18s. Teens can still create AI videos and images within safety bounds.

Deniz Demir, head of safety engineering at Character.ai, explains: ‘Users create hundreds of thousands of new Characters on the platform every day. Our dedicated Trust and Safety team moderates Characters proactively and in response to user reports, including using automated classifiers and industry-standard blocklists and custom blocklists that we regularly expand. We remove Characters that violate our terms of service, and we will remove the characters you shared.’